Prototype holographic near-eye display system (© SCI).

A technology talk given at SIGGRAPH 2022 by a team of researchers from the Stanford Computational Imaging (SCI) Lab reports on potential improvements to near-eye holographic displays.

Holographic near-eye displays offer unprecedented capabilities for virtual and augmented reality systems, including perceptually important focus cues. Although artificial intelligence-driven algorithms for computer-generated holography (CGH) have recently made much progress in improving the image quality and synthesis efficiency of holograms, these algorithms are not directly applicable to emerging phase-only spatial light modulators (SLMs), which are extremely fast but offer only very limited phase precision. The speed of these SLMs enables time multiplexing: several frames are shown in rapid succession and the eye averages their intensities, which essentially enables partially coherent holographic display modes.
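The two properties named above, coarse phase precision and fast frame rates, can be made concrete with a minimal NumPy sketch. It assumes a phase-only SLM with a small number of equally spaced phase levels (`levels` is a hypothetical parameter, not a figure from the talk) and assumes the eye simply averages the intensities of frames shown in quick succession. The helper names are illustrative.

```python
import numpy as np

def quantize_phase(phase, levels):
    """Snap phase values (radians) to the nearest of `levels` equally spaced values in [0, 2*pi)."""
    step = 2 * np.pi / levels
    index = np.round(np.mod(phase, 2 * np.pi) / step) % levels  # a value near 2*pi wraps back to 0
    return index * step

def time_averaged_intensity(frame_fields):
    """Intensity perceived when frames are shown faster than the eye integrates: mean of |u_t|^2."""
    return np.mean(np.abs(np.asarray(frame_fields)) ** 2, axis=0)

# A hypothetical 4-level SLM: 0.1 rad rounds to 0, 1.5 rad to pi/2, and 2*pi - 0.1 wraps to 0.
quantized = quantize_phase(np.array([0.1, 1.5, 2 * np.pi - 0.1]), levels=4)

# Two frames whose fields differ only in the sign of the second pixel.
u1 = np.array([1.0 + 0j, 1.0 + 0j])
u2 = np.array([1.0 + 0j, -1.0 + 0j])
multiplexed = time_averaged_intensity([u1, u2])  # intensities add: no cancellation
coherent = np.abs((u1 + u2) / 2) ** 2            # fields add: second pixel cancels
```

The last two lines show why time multiplexing behaves like a partially coherent source: frames combine in intensity rather than in field, so the destructive interference seen in the coherent sum does not occur.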

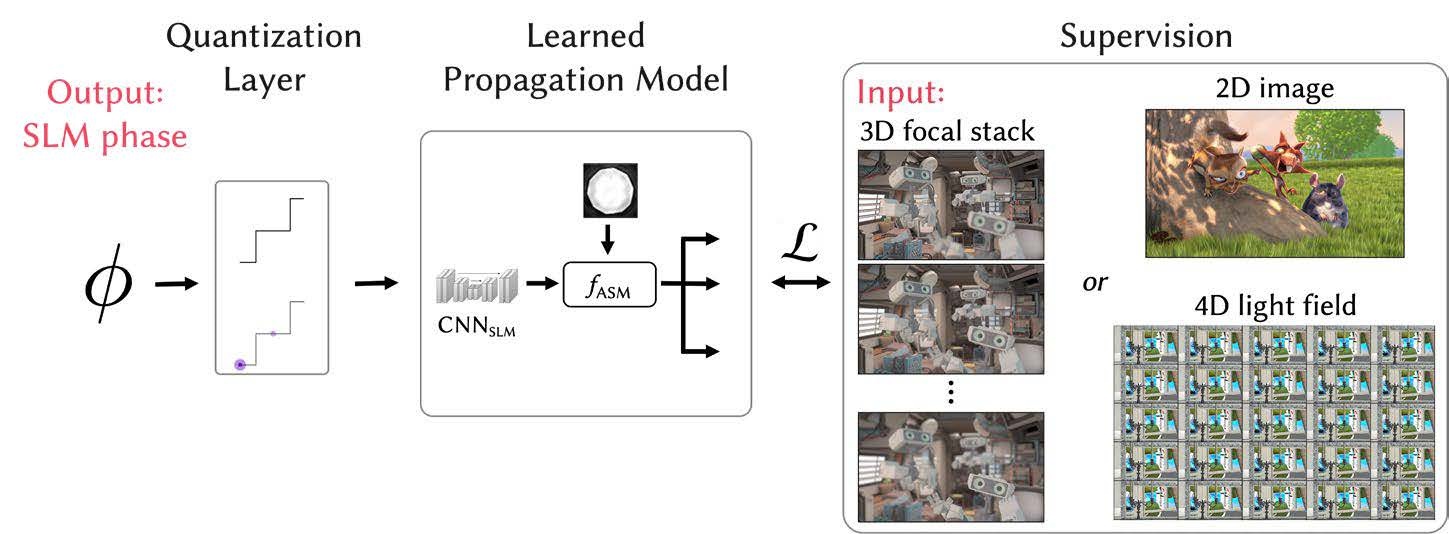

In their presentation, the team reported advances in camera-calibrated wave propagation models for these types of near-eye holographic displays, and described the development of a CGH framework that robustly optimizes the heavily quantized phase patterns of fast SLMs. According to the team, the framework flexibly supports runtime supervision with different types of content, including 2D and 2.5D RGBD images, 3D focal stacks, and 4D light fields.
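One way to picture runtime supervision is as a loss evaluated on what the viewer would actually perceive. The sketch below assumes an amplitude mean-squared-error loss over a focal stack, with the eye averaging intensity across time-multiplexed frames; the function name `focal_stack_loss`, the array layout and the loss itself are assumptions for illustration, not the team's published objective. A plain 2D image corresponds to a single plane (P = 1).

```python
import numpy as np

def focal_stack_loss(frame_fields, target_amps):
    """Mean squared error between perceived and target amplitudes over all planes (hypothetical loss).

    frame_fields: complex array (T, P, H, W): T time-multiplexed frames, P target planes.
    target_amps:  real array (P, H, W) of non-negative target amplitudes.
    """
    perceived_intensity = np.mean(np.abs(frame_fields) ** 2, axis=0)  # eye averages intensity over frames
    perceived_amp = np.sqrt(perceived_intensity)
    return np.mean((perceived_amp - target_amps) ** 2)

rng = np.random.default_rng(0)
targets = rng.uniform(0, 1, size=(3, 16, 16))            # hypothetical 3-plane focal stack
phases = rng.uniform(0, 2 * np.pi, size=(4, 3, 16, 16))  # 4 frames with arbitrary phases
perfect = targets * np.exp(1j * phases)                  # broadcasts over the frame axis
loss_perfect = focal_stack_loss(perfect, targets)        # phase is free, so this is ~0
loss_dim = focal_stack_loss(0.5 * perfect, targets)      # half the amplitude everywhere
```

In a real optimization the frame fields would come from a propagation model applied to the quantized SLM patterns, and the loss would be differentiated with respect to those patterns; getting useful gradients through the quantization step is what the framework is designed to handle robustly and is not shown here.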

Using their framework, they demonstrated state-of-the-art results for all of these scenarios in simulation and experiment.

Unlike conventional displays, which directly present the desired intensities, holographic displays drive an SLM with exotic phase patterns, modulating the phase of light at each pixel. The modulated wavefield then propagates and reconstructs a 3D scene in a volume.
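The propagation step can be sketched with the angular spectrum method, a standard free-space propagation model. The article does not name the operator the team uses, so this choice is an assumption, and the wavelength, pixel pitch, distance and array size below are hypothetical illustrative values.

```python
import numpy as np

def asm_propagate(field, z, wavelength, pitch):
    """Propagate a sampled complex field by distance z (meters) with the angular spectrum method.

    No zero-padding is applied, so the convolution is circular (acceptable for a sketch).
    """
    ny, nx = field.shape
    fx = np.fft.fftfreq(nx, d=pitch)  # spatial frequencies in cycles per meter
    fy = np.fft.fftfreq(ny, d=pitch)
    FX, FY = np.meshgrid(fx, fy)      # both arrays have shape (ny, nx)
    arg = 1.0 / wavelength**2 - FX**2 - FY**2
    kz = 2 * np.pi * np.sqrt(np.maximum(arg, 0.0))
    H = np.where(arg > 0, np.exp(1j * kz * z), 0)  # transfer function; evanescent waves dropped
    return np.fft.ifft2(np.fft.fft2(field) * H)

# Hypothetical parameters: 520 nm light, 6.4 um SLM pixels, 64 x 64 patch, 10 mm propagation.
rng = np.random.default_rng(0)
slm_phase = rng.uniform(0, 2 * np.pi, size=(64, 64))
slm_field = np.exp(1j * slm_phase)     # phase-only modulation: unit amplitude everywhere
target_field = asm_propagate(slm_field, z=10e-3, wavelength=520e-9, pitch=6.4e-6)
intensity = np.abs(target_field) ** 2  # what a camera or eye would see at that plane
```

Although the SLM changes only phase, the propagated intensity is no longer uniform: interference during propagation turns phase variations into brightness variations, which is what a CGH algorithm exploits.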

Illustration of the framework.

The complex-valued field at the SLM is adjusted by several learnable terms (including a discrete lookup table) and then processed by a convolutional neural network (CNN). The resulting complex-valued wave field is propagated to all target planes using a wave propagation operator with amplitude and phase terms in the Fourier domain. The wave field at each target plane is then processed by smaller CNNs. The framework supports multiple input forms, including 2D, 2.5D and 3D content.
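The pipeline in this description can be sketched as a small forward model. This is a hypothetical skeleton, not the team's code: the CNNs are replaced by identity functions, the propagation operator is assumed to be the angular spectrum method, the illumination amplitude is an assumed extra learnable term, and all names, sizes and distances are illustrative. With neutral parameters the model reduces to plain propagation, which makes it easy to check.

```python
import numpy as np

def asm_transfer(shape, z, wavelength, pitch):
    """Angular-spectrum transfer function for distance z (evanescent components set to zero)."""
    ny, nx = shape
    FX, FY = np.meshgrid(np.fft.fftfreq(nx, d=pitch), np.fft.fftfreq(ny, d=pitch))
    arg = 1.0 / wavelength**2 - FX**2 - FY**2
    kz = 2 * np.pi * np.sqrt(np.maximum(arg, 0.0))
    return np.where(arg > 0, np.exp(1j * kz * z), 0)

def identity(x):
    """Hypothetical stand-in for a trained CNN."""
    return x

class CalibratedWaveModel:
    """Hypothetical skeleton of a camera-calibrated propagation model.

    In a real system every parameter below would be fitted to camera captures;
    here they start at neutral values so the model reduces to plain ASM propagation.
    """

    def __init__(self, lut, shape, distances, wavelength, pitch):
        self.lut = np.asarray(lut)            # phase (radians) produced by each discrete SLM level
        self.source_amp = np.ones(shape)      # illumination amplitude at the SLM (assumed term)
        self.fourier_amp = np.ones(shape)     # amplitude term in the Fourier domain
        self.fourier_phase = np.zeros(shape)  # phase term (e.g., aberrations) in the Fourier domain
        self.transfer = [asm_transfer(shape, z, wavelength, pitch) for z in distances]
        self.slm_cnn = identity
        self.plane_cnns = [identity for _ in distances]

    def __call__(self, levels):
        """Map an integer array of SLM levels to one complex field per target plane."""
        field = self.source_amp * np.exp(1j * self.lut[levels])  # discrete lookup-table step
        field = self.slm_cnn(field)
        spectrum = np.fft.fft2(field) * self.fourier_amp * np.exp(1j * self.fourier_phase)
        return [cnn(np.fft.ifft2(spectrum * H)) for cnn, H in zip(self.plane_cnns, self.transfer)]

# Hypothetical 16-level SLM with an ideal linear LUT and three target planes.
lut = np.linspace(0, 2 * np.pi, 16, endpoint=False)
model = CalibratedWaveModel(lut, (64, 64), distances=[8e-3, 10e-3, 12e-3],
                            wavelength=520e-9, pitch=6.4e-6)
levels = np.random.default_rng(0).integers(0, 16, size=(64, 64))
plane_fields = model(levels)
```

Calibration would then fit `lut`, `source_amp`, `fourier_amp`, `fourier_phase` and the CNN weights so that predicted intensities match camera captures of displayed patterns; that fitting loop is omitted. A measured lookup table may well be nonlinear, which is why it is modeled as a table rather than a formula.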

The method is flexible enough to use other types of input, such as 4D light fields.

Because the framework directly supervises the full light field, the team reports that it is the only method to date that can reconstruct a full, high-quality light field. Other methods either fail to reach high reconstruction quality because they are not optimized, or, if they rely on smooth target phases, fail to reconstruct the full light field, since a smooth phase severely limits how far the light spreads out.
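The link between phase smoothness and light spread can be illustrated in 1D. This sketch assumes a short-time Fourier transform with non-overlapping windows as the mapping from a complex field to a light field, a common approximation in holographic stereogram work; the article does not say how the framework computes light fields, and the window size, field length and helper names are hypothetical. A constant phase stands in for the extreme case of a smooth phase.

```python
import numpy as np

def light_field_1d(field, patch):
    """Approximate a 1D light field L(x, u) from a complex field.

    The field is split into non-overlapping windows of `patch` samples and each
    window is Fourier transformed: rows index position x, columns index direction u
    (zero angle sits in the middle column after fftshift).
    """
    n = field.size // patch
    windows = field[: n * patch].reshape(n, patch)
    spectra = np.fft.fftshift(np.fft.fft(windows, axis=1), axes=1)
    return np.abs(spectra) ** 2

def off_axis_fraction(lf):
    """Fraction of light-field energy travelling at a non-zero angle."""
    center = lf.shape[1] // 2
    return 1.0 - lf[:, center].sum() / lf.sum()

rng = np.random.default_rng(0)
smooth = np.exp(1j * np.zeros(1024))                             # smooth (here constant) phase
rough = np.exp(1j * rng.uniform(0, 2 * np.pi, size=1024))        # random phase
smooth_spread = off_axis_fraction(light_field_1d(smooth, patch=8))
random_spread = off_axis_fraction(light_field_1d(rough, patch=8))
```

With the constant phase all energy stays in the on-axis direction, so the light field contains only a single viewing direction; the random phase sends most of the energy (about seven eighths on average for 8-sample windows) into other directions.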

The work presented at SIGGRAPH 2022 was published in the paper 'Time-multiplexed Neural Holography: A flexible framework for holographic near-eye displays with fast heavily quantized spatial light modulators' 1.